Evaluate and improve your RAG with our metrics

Boost the accuracy of your LLM app with foresight.

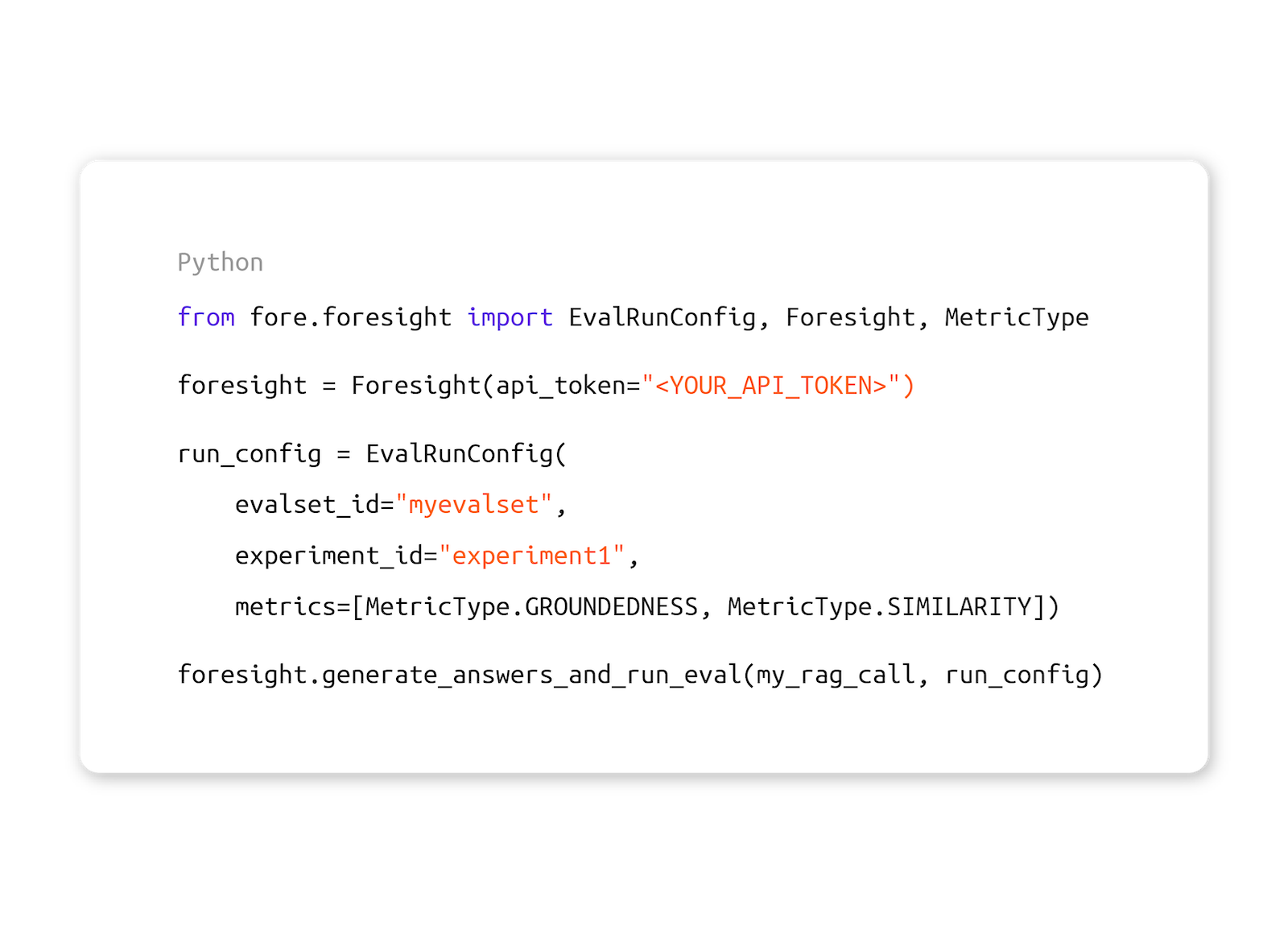

SETUP

Plug foresight into your code with a couple of lines.

MEASURE

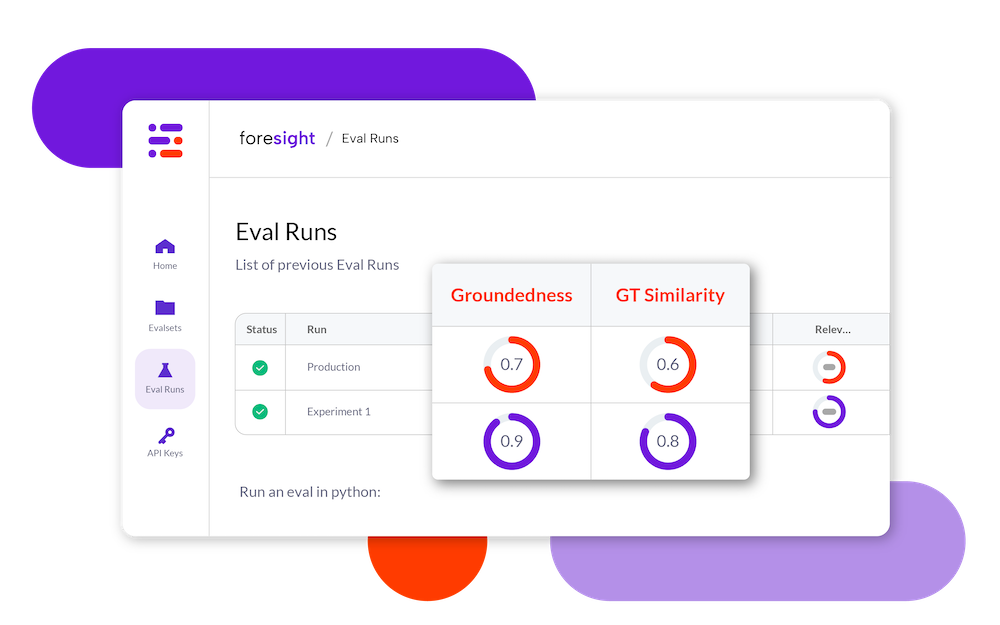

Run your evaluation in foresight using foreai’s metrics:

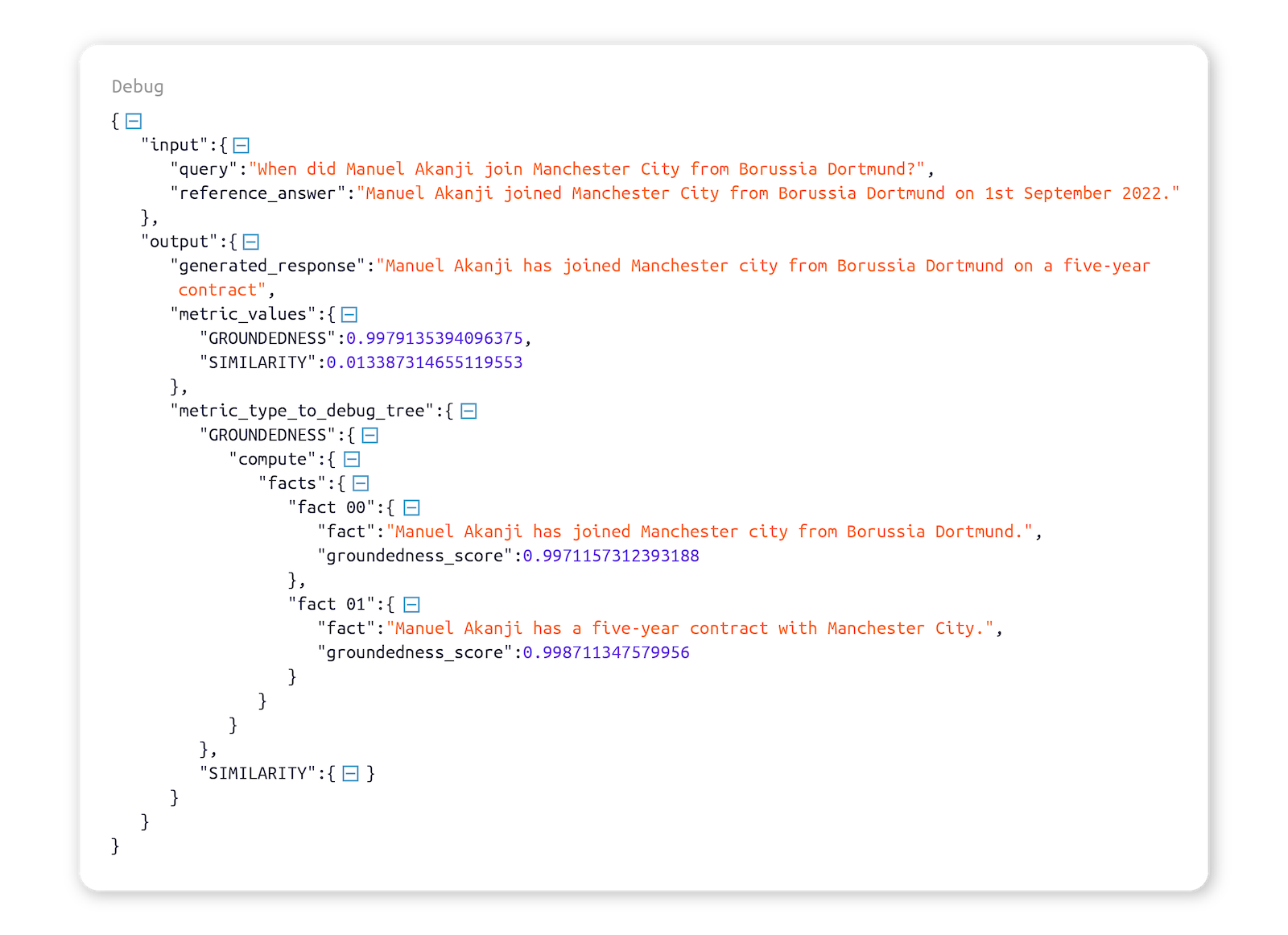

- Groundedness Score: Is the response based on the context and nothing else?

- Ground Truth Similarity: Is the response semantically equivalent to the ground truth?

More coming soon.

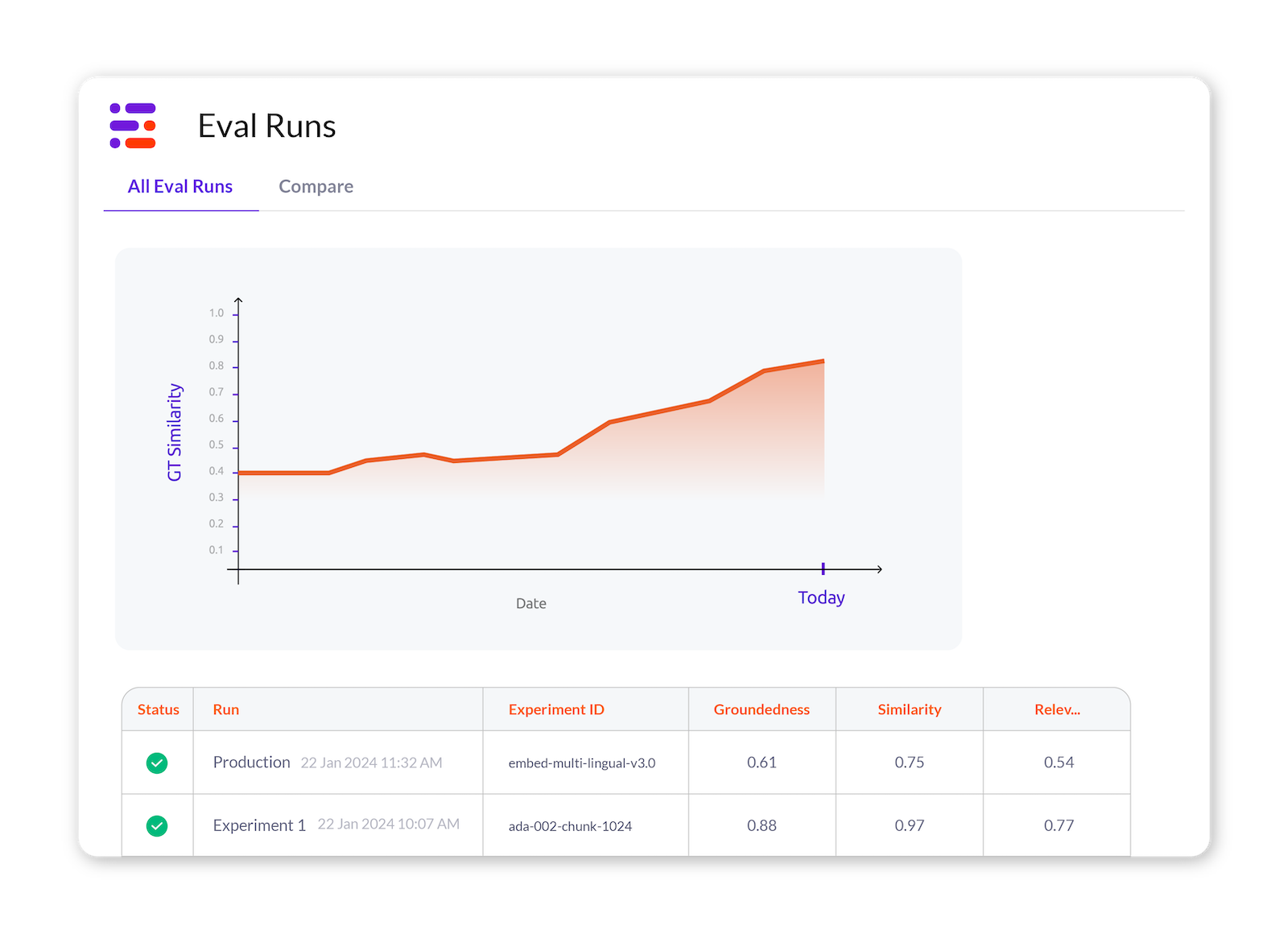

OBSERVE

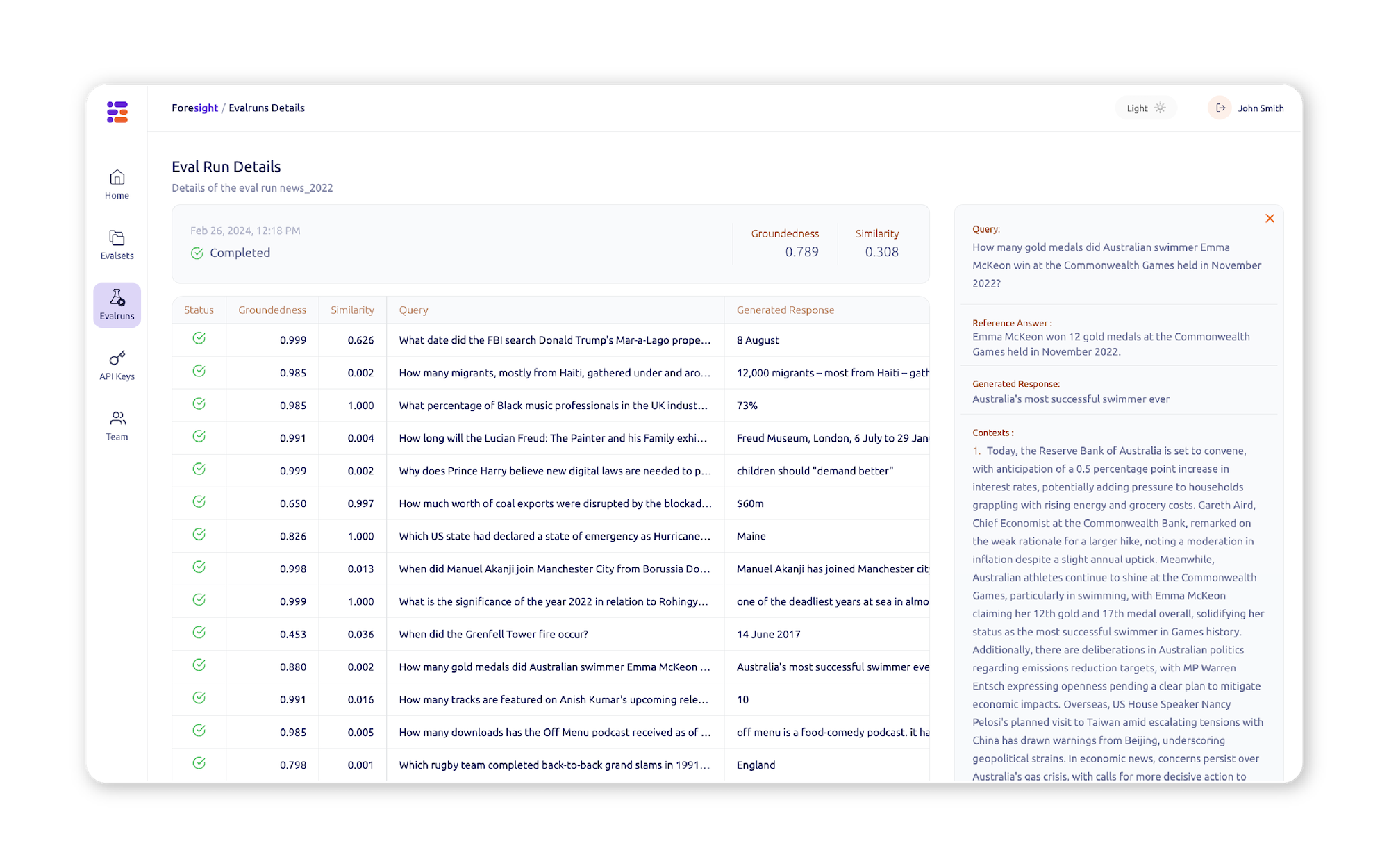

Observe and debug the performance of your LLM app.

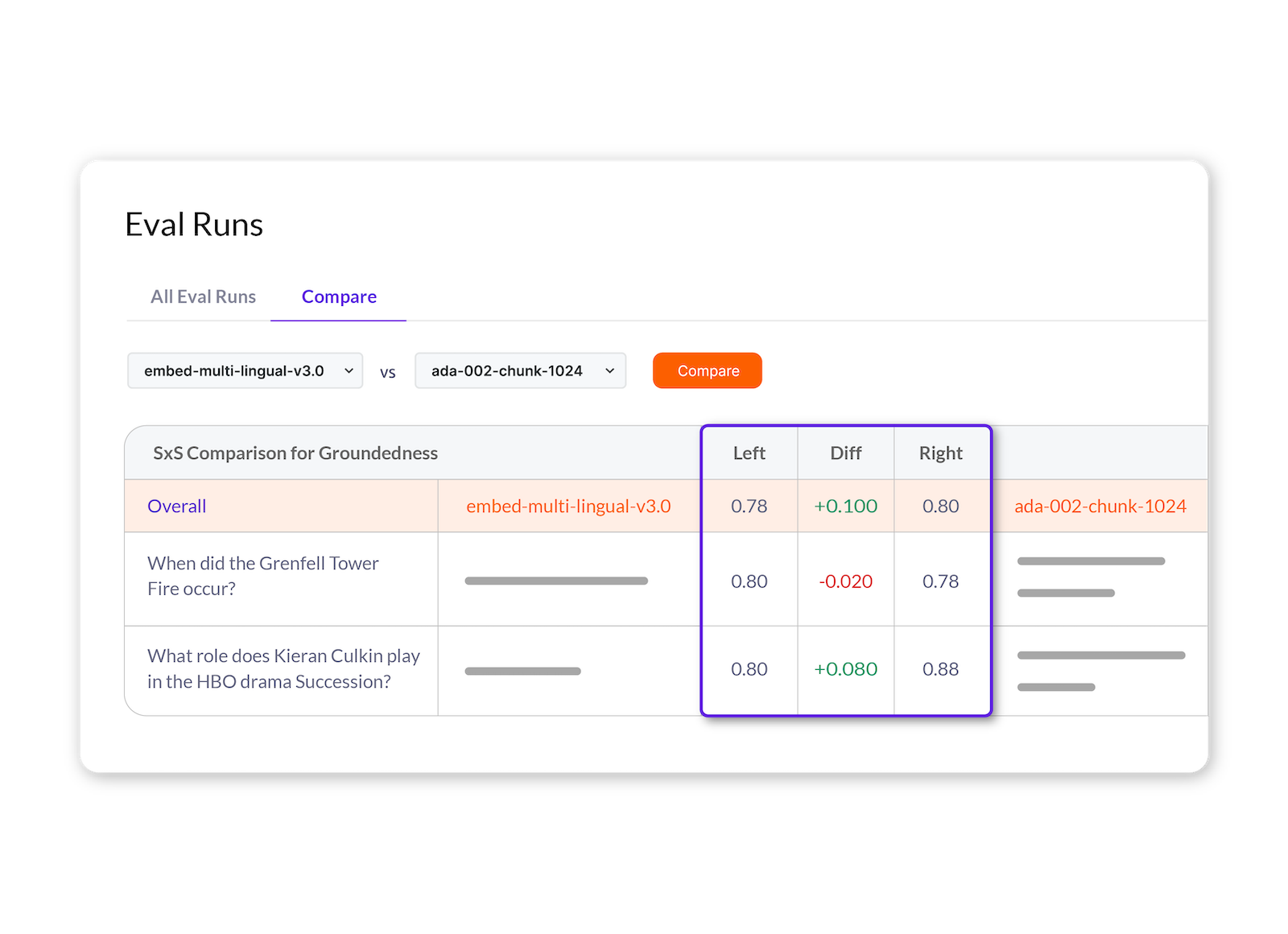

COMPARE

Compare your evaluations and drill deeper.

IMPROVE & ITERATE

Improve your RAG and build a high-performing LLM app.

Measure & improve LLM product performance, before & after launch.

APPLICABLE IN A VARIETY OF LLM APPLICATIONS

Higher accuracy leads to higher customer satisfaction

[Image: chatbot examples report]

Basic

Free

- Up to 1,000 evaluated queries per month
- 1 seat per company
- Basic support
- No credit card required
- All platform features

Enterprise

- Unlimited volume
- Unlimited seats
- Premium support & custom setup
- Hosted or self-hosted deployment

RELATED CONTENT

The year of AI quality hill-climb

Having seen the potential of AI in “It works!” demos, and having realized how much work lies between a demo and a production system, we foresee that in 2024 companies will need to start climbing the hill of AI accuracy.